In the 1960s, the American Standard Code for Information Interchange, known as ASCII, became a standard for encoding characters as 7-bit codes.

This encoding system allowed for a uniform way to represent text and control characters across different devices and platforms, facilitating the growth of digital communication.

In this article, we will explore what ASCII is, how it works, and where it is still used.

What is ASCII?

ASCII, pronounced “ask-ee,” is an acronym for the American Standard Code for Information Interchange.

It is a widely used character encoding standard designed to represent text in computers, telecommunications equipment, and other devices that handle textual data.

By providing a consistent way to encode characters, ASCII has become a foundational element in the field of digital communication and data processing.

History of ASCII

ASCII was developed from telegraph code, building on the need for a standardized method of encoding text for electronic communication.

The first version of ASCII was released in 1963, marking a significant milestone in the history of computing.

It underwent a major revision in 1967. For decades afterward, ASCII was the most common character encoding in the world, used by computers and a wide array of electronic devices to represent text and control characters, until Unicode encodings such as UTF-8 overtook it.

ASCII is a 7-bit code, which allows for 128 unique characters (2^7 = 128). The set includes uppercase and lowercase English letters, digits, punctuation marks, the space character, and control characters used for text formatting and data transmission, such as carriage return and line feed.
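To make those numbers concrete, the short Python sketch below splits the 128 codes into control codes and printable characters. It assumes the conventional split (codes 0 to 31 plus 127, DEL, are control codes; 32 to 126 are printable); the variable names are only illustrative.

```python
# Split the 128 ASCII codes into control codes and printable characters.
# Control codes: 0-31 and 127 (DEL). Printable: 32 (space) through 126 ("~").
control_codes = [code for code in range(128) if code < 32 or code == 127]
printable_codes = list(range(32, 127))

print(len(control_codes))     # 33
print(len(printable_codes))   # 95
print((127).bit_length())     # 7 -> the highest code fits in 7 bits

# Python's str.isprintable() agrees with this split for ASCII characters.
print(all(chr(c).isprintable() for c in printable_codes))    # True
print(not any(chr(c).isprintable() for c in control_codes))  # True
```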

As computers standardized on 8-bit bytes and the need for more characters became apparent, vendors and standards bodies used the eighth bit to extend ASCII.

These extensions expanded the character set to 256 codes, adding symbols, special characters, and accented letters used in various languages. They were never part of the ASCII standard itself, however, and different extensions assign the extra codes differently.

How Does ASCII Work?

Each ASCII character corresponds to a specific number, ranging from 0 to 127 in standard 7-bit ASCII and from 0 to 255 in 8-bit extended ASCII variants.

Inside the computer, these numbers are stored in binary, because computers process and store all data as sequences of ones and zeros.

For example, the ASCII code for the uppercase letter “A” is 65. When converted to binary, 65 becomes 01000001.

This binary sequence is what the computer uses to identify and display the letter “A” on your screen or store it in memory.
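The same conversion can be sketched in Python. The helper names `to_binary` and `from_binary` are hypothetical, chosen for this example; `format(code, "08b")` pads the binary form to eight digits so it matches the 8-bit value 01000001 given above.

```python
def to_binary(char: str) -> str:
    """Return the character's ASCII code as an 8-digit binary string."""
    code = ord(char)            # "A" -> 65
    return format(code, "08b")  # 65 -> "01000001" (zero-padded to 8 digits)


def from_binary(bits: str) -> str:
    """Convert an 8-digit binary string back to its character."""
    return chr(int(bits, 2))    # "01000001" -> 65 -> "A"


print(to_binary("A"))           # 01000001
print(from_binary("01000001"))  # A
```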

Each character, symbol, or control code has a unique ASCII number, which can be similarly converted to a binary sequence.

This system ensures that text and data can be consistently encoded, transmitted, and interpreted across different computers and devices, maintaining uniformity and compatibility in digital communications.
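A minimal sketch of that round trip, assuming the sender and receiver both use Python's built-in `"ascii"` codec: the text is encoded into bytes, each byte holds one ASCII code, and decoding the same bytes restores the original string.

```python
message = "Hi!"

# Encode the text as ASCII bytes, as a sender would before transmitting it.
payload = message.encode("ascii")
print(payload)         # b'Hi!'
print(list(payload))   # [72, 105, 33]
print(" ".join(format(b, "08b") for b in payload))
# 01001000 01101001 00100001

# The receiver decodes the same bytes with the same table and recovers the text.
received = payload.decode("ascii")
print(received == message)  # True
```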

Importance of ASCII

ASCII’s importance lies in its universality and simplicity. Before ASCII, different computers and devices used various methods to represent text, which made communication between them difficult.

ASCII gave devices a common code for text, which helped pave the way for the development of the internet and other communication technologies.

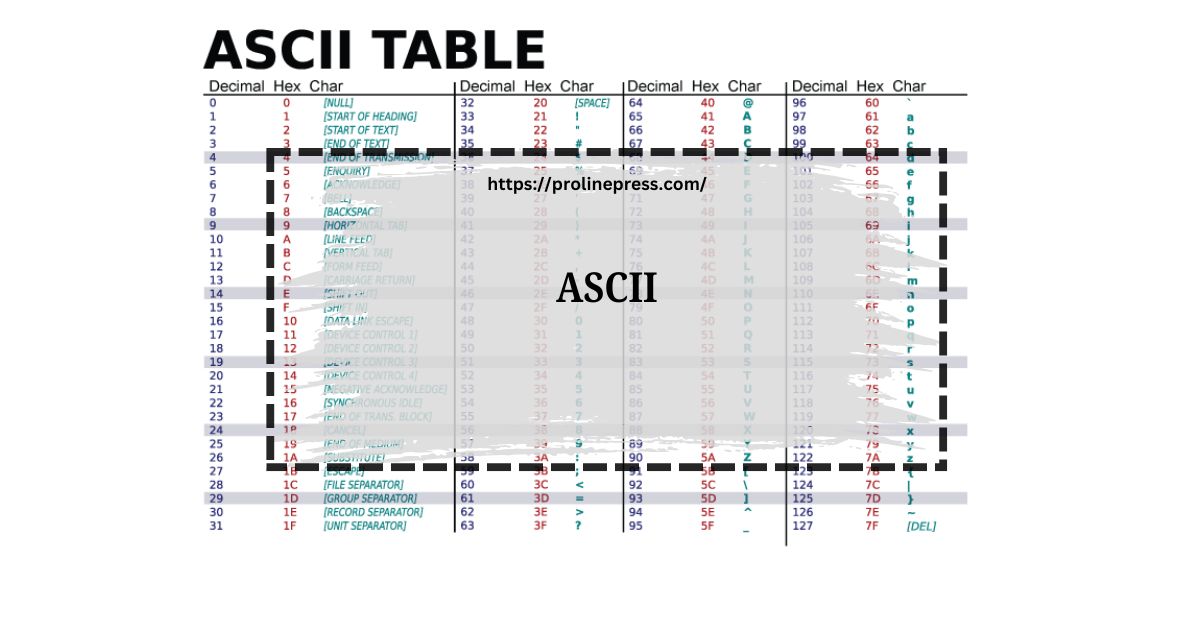

ASCII Table

The ASCII table is a chart that maps characters to their corresponding ASCII codes. Here is a simplified version of the ASCII table:

| Character | ASCII Code (Decimal) | Binary |
|-----------|----------------------|--------|
| A | 65 | 01000001 |
| B | 66 | 01000010 |
| C | 67 | 01000011 |
| … | … | … |
| a | 97 | 01100001 |
| b | 98 | 01100010 |
| c | 99 | 01100011 |
| … | … | … |
| 0 | 48 | 00110000 |
| 1 | 49 | 00110001 |
| 2 | 50 | 00110010 |
| … | … | … |
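Every row of the table follows the same rule, so rows like these can be generated rather than typed by hand. The `ascii_row` helper below is hypothetical; it simply formats a character, its decimal code, and its 8-digit binary form in the table's column order.

```python
def ascii_row(char: str) -> str:
    """Format one table row: character, decimal ASCII code, 8-bit binary."""
    code = ord(char)
    return f"| {char} | {code} | {code:08b} |"


for char in "ABCabc012":
    print(ascii_row(char))
```

Running the loop prints nine rows, from `| A | 65 | 01000001 |` to `| 2 | 50 | 00110010 |`.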

Extended ASCII

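Standard ASCII is a 7-bit code with 128 characters. Because computers store data in 8-bit bytes, the eighth bit leaves room for 128 more codes, and many vendors and standards bodies put that room to use.

"Extended ASCII" is therefore not a single standard but a family of 8-bit character sets that keep codes 0 to 127 identical to ASCII and assign codes 128 to 255 differently. Well-known examples include IBM's code page 437 (used by the original IBM PC), ISO 8859-1 (Latin-1), and Windows-1252.

These extensions added symbols, punctuation marks, box-drawing characters, and accented letters used in various languages.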
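The practical consequence is that a byte above 127 has no single meaning. The sketch below decodes one byte, 0xE9 (an arbitrary choice), with two of Python's built-in codecs: `"latin-1"` (ISO 8859-1) and `"cp437"` (IBM PC code page 437).

```python
# One byte above 127, interpreted under two different 8-bit code pages.
raw = bytes([0xE9])

print(raw.decode("latin-1"))  # é  (ISO 8859-1)
print(raw.decode("cp437"))    # Θ  (IBM PC code page 437)

# Strict 7-bit ASCII has no character for this byte at all.
try:
    raw.decode("ascii")
except UnicodeDecodeError:
    print("not valid ASCII:", hex(raw[0]))
```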

ASCII and Unicode

While ASCII was the standard for many years, the limits of 128, or even 256, characters became apparent as computing became more global: no single 8-bit table can cover all of the world's writing systems.

Unicode was developed to address these limitations, providing a much larger character set that includes characters from virtually every written language in the world.

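Despite the rise of Unicode, ASCII remains important because the first 128 Unicode code points (U+0000 to U+007F) are identical to the ASCII characters. UTF-8, the most widely used Unicode encoding, also stores these characters as the same single bytes, so any valid ASCII text is also valid UTF-8, ensuring backward compatibility.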
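Python can demonstrate this compatibility directly. The sketch assumes UTF-8 as the Unicode encoding and compares its output with plain ASCII encoding; the sample strings are arbitrary.

```python
text = "Hello"

# For pure-ASCII text, the UTF-8 bytes are identical to the ASCII bytes.
print(text.encode("ascii") == text.encode("utf-8"))  # True

# The Unicode code point of "A" is the same as its ASCII code.
print(ord("A"), hex(ord("A")))  # 65 0x41

# Characters outside ASCII need more than one byte in UTF-8.
print("é".encode("utf-8"))      # b'\xc3\xa9'
```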

Uses of ASCII

Text Files

ASCII is commonly used in text files. These files, often with extensions like .txt, contain plain text without any formatting, which makes them small and readable in virtually any text editor.
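Whether a given file is pure ASCII can be checked by looking for any byte above 127. This is a minimal sketch: `is_ascii_file` is a hypothetical helper, the temporary file names are illustrative, and `bytes.isascii()` requires Python 3.7 or later.

```python
import tempfile
from pathlib import Path


def is_ascii_file(path: Path) -> bool:
    """Return True if every byte in the file is in the ASCII range 0-127."""
    return path.read_bytes().isascii()


# Demonstration with two temporary files (names are illustrative).
with tempfile.TemporaryDirectory() as tmp:
    plain = Path(tmp) / "notes.txt"
    plain.write_text("Plain text, no formatting.\n", encoding="ascii")

    accented = Path(tmp) / "menu.txt"
    accented.write_text("Café au lait\n", encoding="utf-8")

    plain_is_ascii = is_ascii_file(plain)
    accented_is_ascii = is_ascii_file(accented)

print(plain_is_ascii)     # True
print(accented_is_ascii)  # False: "é" takes two bytes above 127 in UTF-8
```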

Programming

In programming, ASCII codes are often used to manipulate text. Many programming languages include functions to convert characters to their ASCII codes and vice versa.

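For example, in Python, the ord() function returns the numeric code of a character, and the chr() function returns the character for a given code. Strictly speaking, these functions work with Unicode code points, which are identical to ASCII codes for the first 128 characters.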
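A few lines show both functions in action. The digit example at the end relies on the digits 0 to 9 having consecutive ASCII codes (48 to 57).

```python
print(ord("A"))  # 65
print(chr(97))   # a

# Digits are stored in order, so subtracting ord("0") gives a digit's value.
print(ord("7") - ord("0"))  # 7
```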

Data Transmission

ASCII is used in data transmission, especially in older technologies like teletypes and early internet protocols.

Even today, many internet protocols use ASCII for data transmission because it is simple and universally supported.

The Role of ASCII in Modern Computing

Internet Protocols

Many text-based internet protocols, including HTTP/1.1 (the protocol used for web browsing) and SMTP (the protocol used for email), use ASCII for their commands and headers.

This ensures that these protocols can be used on virtually any device, regardless of its underlying hardware or software.
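As an illustration of an ASCII-based protocol message, the sketch below builds (but does not send) a minimal HTTP/1.1 request. The path and host name are placeholders; the CR LF line endings (ASCII codes 13 and 10) are what HTTP/1.1 uses to separate header lines.

```python
# Hypothetical request: the path and host are placeholders, and nothing is sent.
request_lines = [
    "GET /index.html HTTP/1.1",
    "Host: example.com",
    "Connection: close",
]

# HTTP/1.1 ends each line with CR LF (ASCII 13 and 10)
# and marks the end of the headers with an empty line.
request = ("\r\n".join(request_lines) + "\r\n\r\n").encode("ascii")

print(request)
print(request.isascii())  # True: every byte is below 128
print(request[:3])        # b'GET'
```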

Software Development

In software development, ASCII is often used for string manipulation and processing. Many programming languages provide built-in support for ASCII, making it easy for developers to work with text data.
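One classic example of ASCII-based string manipulation is case conversion. Each uppercase letter's code is exactly 32 less than its lowercase counterpart ("A" is 65, "a" is 97), so toggling the bit worth 32 swaps the case. The function below is a hypothetical teaching example; in practice, Python's built-in `str.swapcase()` does this and also handles non-ASCII letters.

```python
def swap_case_ascii(text: str) -> str:
    """Swap the case of ASCII letters by toggling the bit worth 32 (0x20).

    Uppercase "A"-"Z" are codes 65-90 and lowercase "a"-"z" are 97-122,
    so each pair differs by exactly 32. Other characters are left unchanged.
    """
    result = []
    for char in text:
        code = ord(char)
        if 65 <= code <= 90 or 97 <= code <= 122:
            result.append(chr(code ^ 0x20))  # XOR with 32 flips the case
        else:
            result.append(char)
    return "".join(result)


print(swap_case_ascii("Hello, ASCII 1963!"))  # hELLO, ascii 1963!
```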

Education

ASCII is also an important educational tool. Learning about ASCII helps students understand the basics of how computers process and store text data.

It also introduces them to the concept of character encoding, which is fundamental to many aspects of computer science.

ASCII Art

ASCII art is a graphic design technique that utilizes characters from the ASCII standard to craft images.

This form of art became popular in the early days of computing when graphics capabilities were limited.

Even today, ASCII art remains a popular way to create simple and creative images using text.
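A common way to generate ASCII art from an image is to map each pixel's brightness to a character whose visual density roughly matches it. The sketch below assumes brightness values between 0.0 and 1.0 and uses an arbitrary ten-character ramp; both are illustrative choices rather than a standard, and `render` is a hypothetical helper.

```python
from __future__ import annotations

# Characters ordered from visually sparse to visually dense (an arbitrary choice).
RAMP = " .:-=+*#%@"


def render(grid: list[list[float]]) -> str:
    """Turn rows of brightness values (0.0 to 1.0) into lines of ASCII characters."""
    lines = []
    for row in grid:
        chars = []
        for value in row:
            value = min(max(value, 0.0), 1.0)       # clamp to [0, 1]
            index = round(value * (len(RAMP) - 1))  # 0.0 -> " ", 1.0 -> "@"
            chars.append(RAMP[index])
        lines.append("".join(chars))
    return "\n".join(lines)


# A small diagonal gradient: sparse in the top-left, dense in the bottom-right.
gradient = [[(x + y) / 8 for x in range(5)] for y in range(5)]
print(render(gradient))
```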

Challenges with ASCII

Despite its many advantages, ASCII has some limitations. One of the biggest challenges is its limited character set, which does not support many characters used in non-English languages.

This limitation led to the development of other character encoding standards, such as Unicode, which can represent a much larger range of characters.
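The limitation shows up immediately when non-ASCII text is encoded as ASCII. In this sketch, Python's `"ascii"` codec rejects the "é" in "café"; the `errors="replace"` and `errors="ignore"` options avoid the error only by losing information.

```python
word = "café"

try:
    word.encode("ascii")
except UnicodeEncodeError as err:
    # "é" (code point 233) has no 7-bit ASCII code.
    print(err.start, repr(word[err.start]))  # 3 'é'

# Lossy workarounds: substitute a placeholder or drop the character.
print(word.encode("ascii", errors="replace"))  # b'caf?'
print(word.encode("ascii", errors="ignore"))   # b'caf'
```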

The Future of ASCII

While Unicode has largely replaced ASCII in many applications, ASCII remains relevant due to its simplicity and widespread support.

It is likely to continue to be used in applications where simplicity and compatibility are more important than a large character set.

FAQs

1. How many characters are in the original ASCII standard?

The original ASCII standard includes 128 characters.

2. What is the difference between ASCII and Unicode?

ASCII is a 7-bit character encoding with 128 characters (8-bit extended variants add 128 more), while Unicode is a much larger character set, with encodings such as UTF-8, UTF-16, and UTF-32, that includes characters from virtually every written language.

3. What is extended ASCII?

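Extended ASCII refers to a family of 8-bit character encodings, such as ISO 8859-1 and IBM code page 437, that keep the 128 ASCII characters and use codes 128 to 255 for additional symbols, punctuation marks, and special characters. Different variants assign those upper codes differently.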

4. What are some limitations of ASCII?

One limitation of ASCII is its limited character set, which does not support many characters used in non-English languages.

Conclusion

ASCII, pronounced “ask-ee,” stands for American Standard Code for Information Interchange and has played a foundational role in the development of digital communication.

Developed from telegraph code and first released in 1963, ASCII provided a standardized method to encode text, facilitating consistent communication across various devices. ASCII itself is a 7-bit code, and 8-bit extensions later added more characters. Because each character maps to a number that is stored in binary, ASCII lets computers process and store text efficiently.

Despite newer encoding standards like Unicode, ASCII’s simplicity and universality ensure its ongoing relevance in the digital world.